Introducing the new differential privacy platform from Microsoft and Harvard’s OpenDP

Written by Sarah Bird

The code for a new open source differential privacy platform is now live on GitHub. The project is jointly developed by Microsoft and Harvard’s Institute for Quantitative Social Science (IQSS) and the School of Engineering and Applied Sciences (SEAS) as part of the OpenDP initiative. We’ve released the code to give developers around the world the opportunity to leverage expert differential privacy implementations, review and stress test them, and join the community and make contributions.

Insights from datasets have the potential to help solve the most difficult societal problems in health, the environment, economics, and other areas. Unfortunately, because many datasets contain sensitive information, legitimate concerns about compromising privacy currently prevent the use of potentially valuable stores of data. Differential privacy makes it possible to extract useful insights from datasets while safeguarding the privacy of individuals.

What is differential privacy?

Pioneered by Microsoft Research and their collaborators, differential privacy is the gold standard of privacy protection. Conceptually, differential privacy uses two steps to achieve privacy benefits.

First, a small amount of statistical noise is added to each result to mask the contribution of individual datapoints. The noise is significant enough to protect the privacy of an individual, but still small enough that it will not materially impact the accuracy of the answers extracted by analysts and researchers.
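
As a rough illustration of this first step, the sketch below adds Laplace noise to a simple count. It is a minimal teaching example and not the platform's implementation: the names `laplace_noise` and `noisy_count` and the sample ages are hypothetical, and a production system would need a sampler hardened against floating-point attacks.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise calibrated to epsilon.

    Adding or removing one record changes a count by at most 1,
    so the sensitivity of the query is 1.
    """
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data, used only for illustration.
rng = random.Random(0)
ages = [23, 35, 41, 52, 67, 29, 48, 71, 33, 58]
released = noisy_count(ages, lambda a: a >= 40, epsilon=1.0, rng=rng)
print("Noisy count of people aged 40 or over:", released)
```

The true count here is 6. Each release lands near that value, and a smaller epsilon widens the noise.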

Then, the amount of information revealed by each query is calculated and deducted from an overall privacy-loss budget, and further queries are stopped once personal privacy may be compromised. This can be thought of as a built-in shutoff switch that prevents the system from releasing results when doing so may begin compromising someone’s privacy.
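
The budget step can be sketched as a small accountant that refuses queries once the budget is spent. This assumes basic sequential composition, where the epsilons of successive queries simply add up. The class and exception names are hypothetical and are not part of the platform's API.

```python
class BudgetExceededError(Exception):
    """Raised when a query would push total privacy loss past the budget."""


class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon: float):
        self.total_epsilon = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        return max(0.0, self.total_epsilon - self.spent)

    def charge(self, epsilon: float) -> None:
        """Deduct epsilon for one query, or refuse if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so floating-point rounding does not reject an exact fit.
        if self.spent + epsilon > self.total_epsilon + 1e-12:
            raise BudgetExceededError(
                f"query needs {epsilon}, only {self.remaining} remaining"
            )
        self.spent += epsilon


budget = PrivacyBudget(total_epsilon=1.0)
budget.charge(0.65)  # first query
budget.charge(0.35)  # second query uses up the rest
try:
    budget.charge(0.1)  # third query is refused: the shutoff switch
except BudgetExceededError as err:
    print("Refused:", err)
```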

Deploying differential privacy

Differential privacy has been a vibrant area of innovation in the academic community since the original publication. However, it was notoriously difficult to apply successfully in real-world applications. In the past few years, the differential privacy community has been able to push that boundary and now there are many examples of differential privacy in production. At Microsoft, we are leveraging differential privacy to protect user privacy in several applications, including telemetry in Windows, advertiser queries in LinkedIn, suggested replies in Office, and manager dashboards in Workplace Analytics.

Differential privacy platform

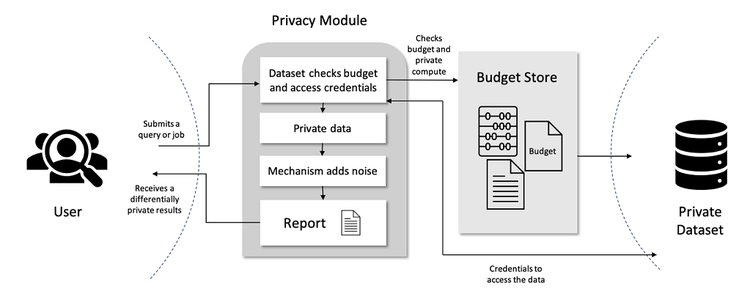

This project aims to connect theoretical solutions from the research community with the practical lessons learned from real-world deployments, to make differential privacy broadly accessible. The system adds noise to mask the contribution of any individual data subject and thereby provide privacy.

It is designed as a collection of components that can be flexibly configured to enable developers to use the right combination for their environments.

In the Core, we provide a pluggable open source library of differentially private algorithms and mechanisms, with implementations based on mature differential privacy research. The library provides a fast, memory-safe native runtime. We also provide APIs for defining an analysis, along with a validator for evaluating these analyses and composing the total privacy loss on a dataset. The runtime and validator are built in Rust; Python support is available and R support is forthcoming.

The analysis graph gives fine-grained control over the privacy-loss budget (epsilon). In this example, the analyst has chosen to split an epsilon of 1.0 unequally between a mean and a variance statistic.

```python
with wn.Analysis() as analysis:
    # load data
    data = wn.Dataset(path=data_path, column_names=var_names)

    # get mean of age
    age_mean = wn.dp_mean(data=wn.cast(data['age'], "FLOAT"),
                          privacy_usage={'epsilon': .65},
                          data_lower=0.,
                          data_upper=100.,
                          data_n=1000)

    # get variance of age
    age_var = wn.dp_variance(data=wn.cast(data['age'], "FLOAT"),
                             privacy_usage={'epsilon': .35},
                             data_lower=0.,
                             data_upper=100.,
                             data_n=1000)

analysis.release()

print("DP mean of age: {0}".format(age_mean.value))
print("DP variance of age: {0}".format(age_var.value))
print("Privacy usage: {0}".format(analysis.privacy_usage))
```

The results will look something like:

```
DP mean of age: 44.55598845931517
DP variance of age: 231.79044646429134
Privacy usage: approximate {
  epsilon: 1.0
}
```

The platform manages the budget for each query and adds the appropriate amount of noise based on that budget. The analyst now has a differentially private mean and variance to use.
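
To give a feel for how the budget drives the noise, here is a back-of-the-envelope sketch for the mean in the example above. It assumes a Laplace mechanism and a sensitivity of (upper - lower) / n for a bounded mean with known n. The platform may choose a different mechanism or sensitivity bound, and the helper name is hypothetical.

```python
def laplace_scale_for_mean(lower: float, upper: float, n: int, epsilon: float) -> float:
    """Noise scale for a bounded mean under the assumptions stated above.

    Replacing one record moves the mean by at most (upper - lower) / n,
    and the Laplace scale is that sensitivity divided by epsilon.
    """
    sensitivity = (upper - lower) / n
    return sensitivity / epsilon


# Parameters taken from the age example: bounds 0 to 100, n = 1000.
mean_scale = laplace_scale_for_mean(0.0, 100.0, 1000, epsilon=0.65)
print(round(mean_scale, 4))  # 0.1538

# The same statistic with a smaller share of the budget needs more noise.
smaller_budget_scale = laplace_scale_for_mean(0.0, 100.0, 1000, epsilon=0.35)
print(round(smaller_budget_scale, 4))  # 0.2857
```

Under these assumptions, giving the mean the larger share of the budget (.65 rather than .35) roughly halves its noise scale, which is the trade-off the analyst is making when splitting epsilon unequally.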

In the System, we provide system components to make it easier to interface with your data systems, including a SQL query dialect and connections to many common data sources, such as PostgreSQL, SQL Server, Spark, Presto, Pandas, and CSV files. Conceptually, the SQL support permits composition of analysis graphs using a subset of SQL-92.

```python
reader = SparkReader(spark)
private = PrivateReader(meta, reader, 1.0)
# query through the private reader so the results are differentially private
result = private.execute_typed('SELECT AVG(age), VAR(age) FROM PUMS')
```

In the Samples, we provide example code and notebooks to demonstrate the use of the platform, teach the properties of differential privacy, and highlight some of the nuances of the implementation.

The platform currently supports scenarios where the data user is trusted by the data owner and wants to ensure that their releases or publications do not compromise privacy. Future releases will focus on hardened scenarios where the researcher or analyst is untrusted and does not have access to the data directly.

Developing the future together

With the basic design and implementation in place, we welcome contributions from developers and others to help build and support the platform going forward. We believe an open approach will ensure trust in the platform and know that contributions from developers and researchers from organizations around the world will be essential in maturing the technology and enabling widespread use of differential privacy in the future.

- Learn more about differential privacy

- Get the open source code

- Get started here

- Use the platform on Azure Machine Learning

Questions or feedback? Please let us know in the comments below.