
CloudSkew is a free online diagram editor that helps you draw cloud architecture diagrams. CloudSkew diagrams can be securely saved to the cloud and icons for AWS, Microsoft Azure, Google Cloud Platform, Kubernetes, Alibaba Cloud, Oracle Cloud (OCI), and more are included.

CloudSkew is currently in public preview and the full list of features and capabilities can be seen here, as well as sample diagrams here. In this post, we’ll review CloudSkew’s building blocks, as well as discuss the lessons learned, key decisions, and trade-offs made in developing the editor.

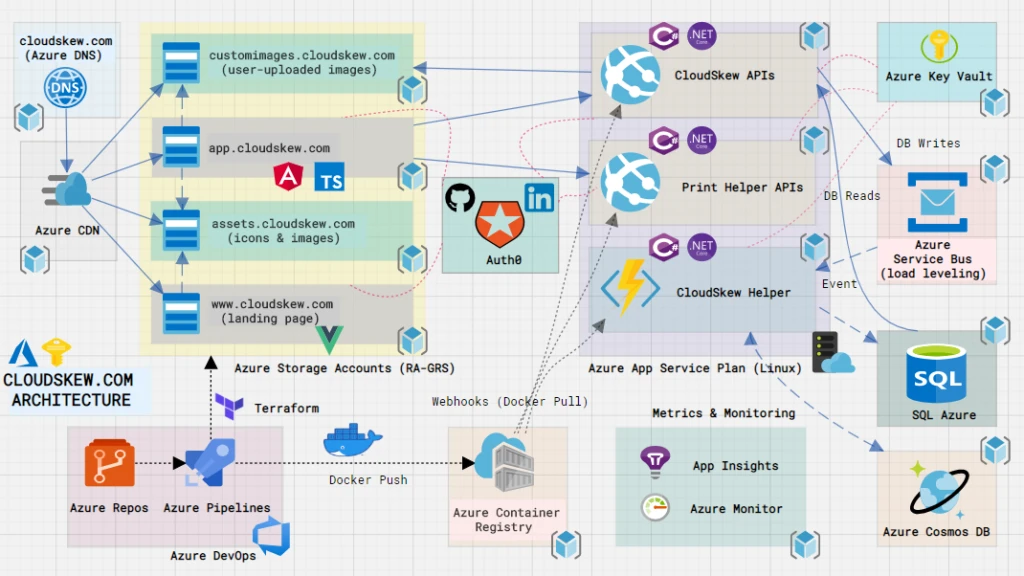

CloudSkew’s infrastructure is built on several Azure services, pieced together like LEGO blocks. Let’s review the individual components below.

At its core, CloudSkew’s front-end consists of two web apps: the landing page and the diagram editor.

The back-end consists of two web API apps, both authored using ASP.NET Core 3.1:

Using ASP.NET Core’s middleware, we ensure that:

The web APIs are stateless and operate under the assumption that they can be restarted or redeployed at any time. There are no sticky sessions or affinities and no in-memory state; all state is persisted to databases using EF Core (an ORM).

Separate DTO/REST and DBContext/SQL models are maintained for all entities, with AutoMapper rules being used for conversions between the two.
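To make the two-model split concrete, here is a minimal Python sketch. It is not CloudSkew’s code (which uses C#, EF Core, and AutoMapper), and the `DiagramEntity`/`DiagramDto` classes and their fields are hypothetical. The point is that each direction of the mapping is an explicit rule, so a server-controlled field such as the owner never leaks into, or gets overwritten from, the REST model.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class DiagramEntity:
    """Hypothetical persistence model (stands in for an EF Core entity)."""
    id: int
    title: str
    json_payload: str
    owner_user_id: int
    updated_utc: datetime


@dataclass
class DiagramDto:
    """Hypothetical REST model: only what the client needs to see."""
    id: int
    title: str
    diagram_json: str
    updated: str  # ISO 8601 string on the wire


def entity_to_dto(entity: DiagramEntity) -> DiagramDto:
    # Outbound rule: rename fields and drop the owner id.
    return DiagramDto(
        id=entity.id,
        title=entity.title,
        diagram_json=entity.json_payload,
        updated=entity.updated_utc.isoformat(),
    )


def apply_dto_to_entity(dto: DiagramDto, entity: DiagramEntity) -> DiagramEntity:
    # Inbound rule: copy only client-editable fields; the owner stays untouched.
    entity.title = dto.title
    entity.json_payload = dto.diagram_json
    entity.updated_utc = datetime.now(timezone.utc)
    return entity
```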

Auth0 is used as the (OIDC-compliant) identity platform for CloudSkew. Users can log in via GitHub or LinkedIn, and the handshake with these identity providers is managed by Auth0 itself. Using the implicit flow, ID and access tokens (JWTs) are granted to the diagram editor app. The Auth0.JS SDK makes all this very easy to implement. All calls to the back-end web APIs pass the access token as a bearer token.

Auth0 creates and maintains the user profiles for all signed-up users. Authorization/RBAC is managed by assigning Auth0 roles to these user profiles. Each role contains a collection of permissions that can be assigned to the users (they show up as custom claims in the JWTs).

Auth0 rules are used to inject custom claims in the JWT and whitelist/blacklist users.
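As an illustration of how permission claims might be consumed, the Python sketch below decodes a JWT payload and checks for a permission. It assumes the permissions arrive as a list under a `permissions` claim (Auth0 can add such a claim to access tokens when RBAC and “Add Permissions in the Access Token” are enabled for an API); the permission name `write:diagrams` is hypothetical. It deliberately skips signature verification, which a real API must perform, for example through ASP.NET Core’s JWT bearer authentication handler.

```python
import base64
import json


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url-encoded without padding; restore it first.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def read_claims_unverified(token: str) -> dict:
    """Return the JWT payload WITHOUT checking the signature (illustration only)."""
    _header, payload, _signature = token.split(".")
    return json.loads(_b64url_decode(payload))


def has_permission(claims: dict, required: str) -> bool:
    # Assumption: permissions are a list of strings under a "permissions" claim.
    return required in claims.get("permissions", [])
```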

Azure SQL Database is used for persisting user data, primarily for Diagram, DiagramTemplate, and UserProfile. User credentials are not stored in CloudSkew’s database (that part is handled by Auth0). User contact details like emails are MD5 hashed.
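A minimal Python sketch of hashing a contact email before it is stored, using the standard `hashlib` module. Trimming and lower-casing the address first is an assumption (it makes the same address always produce the same digest); the post only states that MD5 is used. Because an unsalted MD5 digest can be matched by hashing candidate addresses, this pseudonymizes the data rather than making it secret.

```python
import hashlib


def hash_email(email: str) -> str:
    """Normalize an email address, then return its MD5 hex digest (32 chars)."""
    normalized = email.strip().lower()  # assumption: case/whitespace-insensitive
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()
```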

Because of CloudSkew’s auto-save feature, updates to the Diagram table happen very frequently. Some steps have been taken to optimize this:

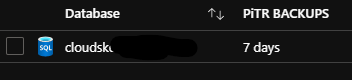

For the preview version, the Azure SQL SKU being used in production is Standard/S0 with 20 DTUs (single database). Currently, the database is only available in one region. Auto-failover groups and active geo-replication (read-replicas) are not currently being used.

Azure SQL’s built-in geo-redundant database backups provide weekly full database backups, differential backups every 12 hours, and transaction log backups every 5 to 10 minutes. Azure SQL stores the backups internally in RA-GRS storage for seven days. The RTO is 12 hours and the RPO is 1 hour. This is perhaps less than ideal, but we’ll look to improve it once CloudSkew’s usage grows.
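Azure SQL picks the backups for a point-in-time restore internally; the Python sketch below only illustrates why the three backup types work together under the schedule above. To restore to a target time, you take the latest full backup before it, the latest differential after that full backup, and then every log backup up to the first one taken at or after the target. Times are plain minutes and the `Backup`/`restore_chain` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    kind: str      # "full", "diff", or "log"
    taken_at: int  # minutes since an arbitrary epoch


def restore_chain(backups: list[Backup], target: int) -> list[Backup]:
    """Return the backups needed to restore the database to `target`."""
    ordered = sorted(backups, key=lambda b: b.taken_at)
    fulls = [b for b in ordered if b.kind == "full" and b.taken_at <= target]
    if not fulls:
        raise ValueError("no full backup precedes the target time")
    chain = [fulls[-1]]
    diffs = [b for b in ordered
             if b.kind == "diff" and chain[0].taken_at < b.taken_at <= target]
    if diffs:
        chain.append(diffs[-1])  # the newest differential replaces older ones
    base = chain[-1]
    # Replay log backups after the base, stopping at the first one that
    # was taken at or after the target (it contains the target moment).
    for b in ordered:
        if b.kind == "log" and b.taken_at > base.taken_at:
            chain.append(b)
            if b.taken_at >= target:
                break
    return chain
```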

Azure Cosmos DB’s usage is purely experimental at this point, mainly for analyzing anonymized, read-only user data in graph format via the Gremlin API. Technically speaking, this database can be removed without any impact on user-facing features.

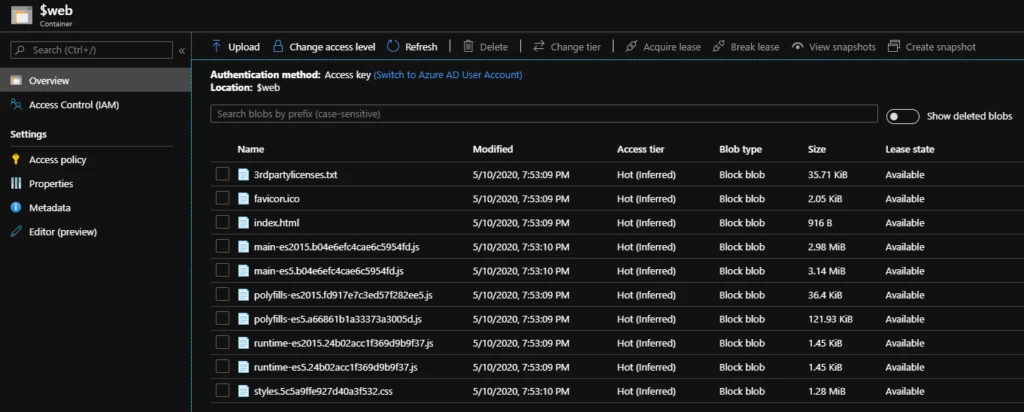

Two Azure Storage Accounts are provisioned for hosting the front-end apps: landing page and diagram editor. The apps are served via the $web blob containers for static sites.

Two more storage accounts are provisioned for serving the static content (mostly icon SVGs) and user-uploaded images (PNG, JPG files) as blobs.

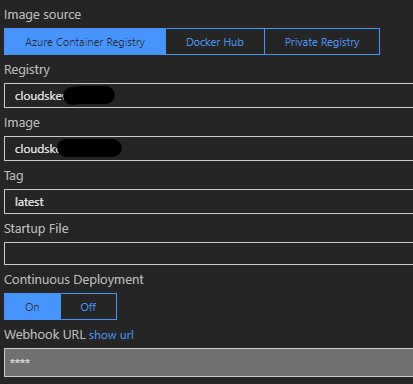

Two Azure App Services on Linux are also provisioned for hosting the containerized back-end web APIs. Both app services share the same App Service Plan, a B1 plan (100 ACU, 1.75 GB memory). This plan doesn’t include automatic horizontal scale-out, so scale-outs have to be done manually. The Always On setting has been enabled.

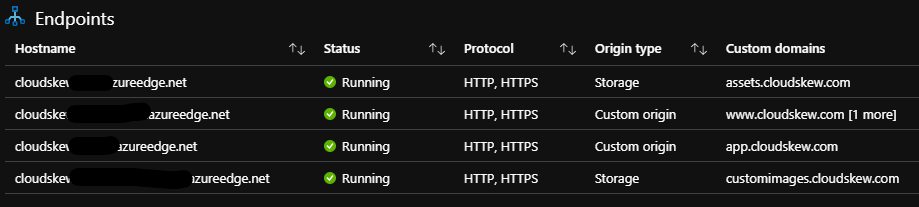

An Azure CDN profile is provisioned with four endpoints, the first two using the hosted front-end apps (landing page and diagram editor) as origins and the other two pointing to the storage accounts (for icon SVGs and user-uploaded images).

In addition to caching at global POPs, content compression at POPs is also enabled.

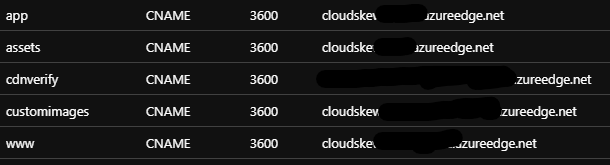

All CDN endpoints have <subdomain>.cloudskew.com custom domain hostnames enabled on them. This is facilitated by using Azure DNS to create CNAME records that map <subdomain>.cloudskew.com to their CDN endpoint counterparts.

Custom domain HTTPS is enabled and the TLS certificates are managed by Azure CDN itself. HTTP-to-HTTPS redirection is also enforced via CDN rules.
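The redirect rule runs inside Azure CDN, but its effect is easy to state in code. In this Python sketch, only the scheme changes while the host, path, and query string are preserved; the 301 status is an assumption (the CDN rule lets you choose the redirect type), and the hostname `app.cloudskew.com` used in the usage is hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def https_redirect(url: str) -> Optional[tuple[int, str]]:
    """Return (status, location) for a plain-HTTP request, or None for HTTPS."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        return None  # already secure: serve the request normally
    netloc = parts.netloc
    if parts.port == 80:
        netloc = netloc.rsplit(":", 1)[0]  # drop the explicit HTTP port
    # Only the scheme changes; host, path, and query string are preserved.
    location = urlunsplit(("https", netloc, parts.path, parts.query, parts.fragment))
    return 301, location  # assumption: a permanent redirect
```

For example, `https_redirect("http://app.cloudskew.com/diagrams?id=42")` yields `(301, "https://app.cloudskew.com/diagrams?id=42")`.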

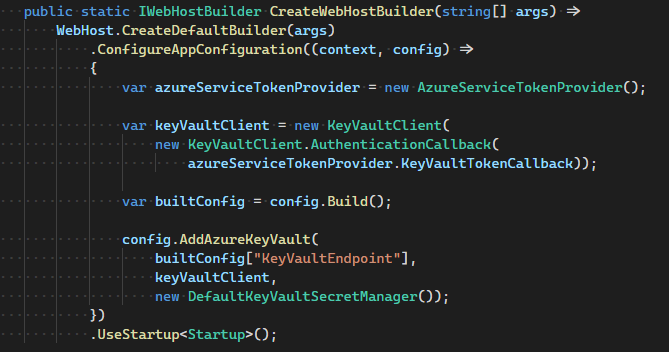

Azure Key Vault is used as a secure, external, central key-value store. This helps decouple back-end web API apps from their configuration settings.

The web API apps have managed identities, which are RBAC’ed for Key Vault access. Also, the web API apps self-bootstrap by reading their configuration settings from the Key Vault at startup. The handshake with the Key Vault is facilitated using the Key Vault Configuration Provider.
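Key Vault secret names can only contain letters, digits, and dashes, so the Key Vault Configuration Provider maps a `--` in a secret name to the `:` separator used by hierarchical ASP.NET Core configuration keys. The Python sketch below mimics that mapping by building a nested settings dictionary; the secret names in the test are hypothetical.

```python
def secrets_to_config(secrets: dict[str, str]) -> dict:
    """Turn flat names like 'Database--ConnectionString' into nested settings."""
    config: dict = {}
    for name, value in secrets.items():
        keys = name.split("--")  # "--" plays the role of ":" in ASP.NET Core keys
        node = config
        for key in keys[:-1]:
            node = node.setdefault(key, {})  # create intermediate sections
        node[keys[-1]] = value
    return config
```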

Even after debouncing calls to the API, the volume of PUT (UPDATE) requests generated by the auto-save feature causes the Azure SQL Database’s DTU consumption to spike, resulting in service degradation. To smooth out these bursts, an Azure Service Bus queue is used as an intermediate buffer: instead of writing directly to the database, the web API enqueues all PUT requests on the service bus, to be drained asynchronously later.
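The post doesn’t describe how the auto-save calls are debounced, so here is a generic Python sketch of the technique: every edit replaces the pending snapshot and restarts a quiet period, and a save is emitted only once no edit has arrived for `quiet_seconds`. The class name and the 2-second window in the test are hypothetical, and the clock is passed in explicitly so the behavior is easy to test.

```python
from typing import Optional


class Debouncer:
    """Collapse a burst of edits into one save after a quiet period."""

    def __init__(self, quiet_seconds: float):
        self.quiet_seconds = quiet_seconds
        self._pending: Optional[str] = None
        self._last_change = 0.0

    def on_change(self, diagram_json: str, now: float) -> None:
        # Each edit replaces the pending snapshot and restarts the quiet period.
        self._pending = diagram_json
        self._last_change = now

    def poll(self, now: float) -> Optional[str]:
        """Return the snapshot to save if the quiet period has elapsed, else None."""
        if self._pending is None or now - self._last_change < self.quiet_seconds:
            return None
        snapshot, self._pending = self._pending, None
        return snapshot
```

Three rapid edits thus produce a single PUT carrying the latest state, which is why debouncing alone reduces, but does not eliminate, write bursts from many concurrent users.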

An Azure Function app is responsible for serially dequeuing the brokered messages from the service bus, using the Service Bus trigger. Once the function receives a peek-locked message, it commits the PUT (UPDATE) to the Azure SQL database. If the function repeatedly fails to process a message, the message is automatically moved to the service bus’s dead-letter queue once its maximum delivery count is exceeded. When this happens, an Azure Monitor alert is triggered.
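The drain loop can be simulated in a few lines of Python, assuming Service Bus-like semantics: each receive increments a delivery count, a failed message becomes available again, and once a message has been delivered the maximum number of times (10 by default in Service Bus) it is dead-lettered. The `InMemoryQueue` and `drain` names are hypothetical; in CloudSkew, the Service Bus trigger takes care of all of this.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    body: dict
    delivery_count: int = 0


@dataclass
class InMemoryQueue:
    """Toy stand-in for a Service Bus queue with peek-lock semantics."""
    max_delivery_count: int = 10
    active: deque = field(default_factory=deque)
    dead_letter: list = field(default_factory=list)

    def send(self, body: dict) -> None:
        self.active.append(Message(body))


def drain(queue: InMemoryQueue, commit: Callable[[dict], None]) -> None:
    """Process messages one at a time; retry failures, then dead-letter them."""
    while queue.active:
        msg = queue.active.popleft()  # receive under a lock
        msg.delivery_count += 1
        try:
            commit(msg.body)  # e.g. the UPDATE against the database
        except Exception:
            if msg.delivery_count >= queue.max_delivery_count:
                queue.dead_letter.append(msg)  # this is what would raise the alert
            else:
                queue.active.appendleft(msg)  # lock abandoned: retried next
```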

The Azure Function app shares the same App Service plan as the back-end web APIs; that is, it runs on a dedicated App Service plan rather than the regular Consumption plan. Overall, this queue-based load-leveling pattern has helped flatten the database load.

The Application Insights SDK is used by the diagram editor (the front-end Angular SPA) as an extensible Application Performance Management (APM) service that helps us better understand user needs. For example, we’re interested in tracking the names of icons that users couldn’t find in the icon palette (via the icon search box). This helps us add frequently searched icons in the future.

Application Insights custom events help us log this data, and KQL queries are used to mine the aggregated data. The Application Insights SDK is also used for logging traces. The log verbosity is configured via app config (externalized using Azure Key Vault).
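In Application Insights, this kind of aggregation would be a KQL `summarize count() by ...` over the `customEvents` table. The Python sketch below performs the equivalent grouping on already-exported events, just to show the idea; the event name `IconNotFound` and the `searchTerm` property are hypothetical.

```python
from collections import Counter


def top_missing_icons(events: list[dict], n: int = 3) -> list[tuple[str, int]]:
    """Count icon searches that found nothing, grouped by normalized search term."""
    counts = Counter(
        event["properties"]["searchTerm"].strip().lower()
        for event in events
        if event.get("name") == "IconNotFound"
    )
    return counts.most_common(n)  # most frequently missed terms first
```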

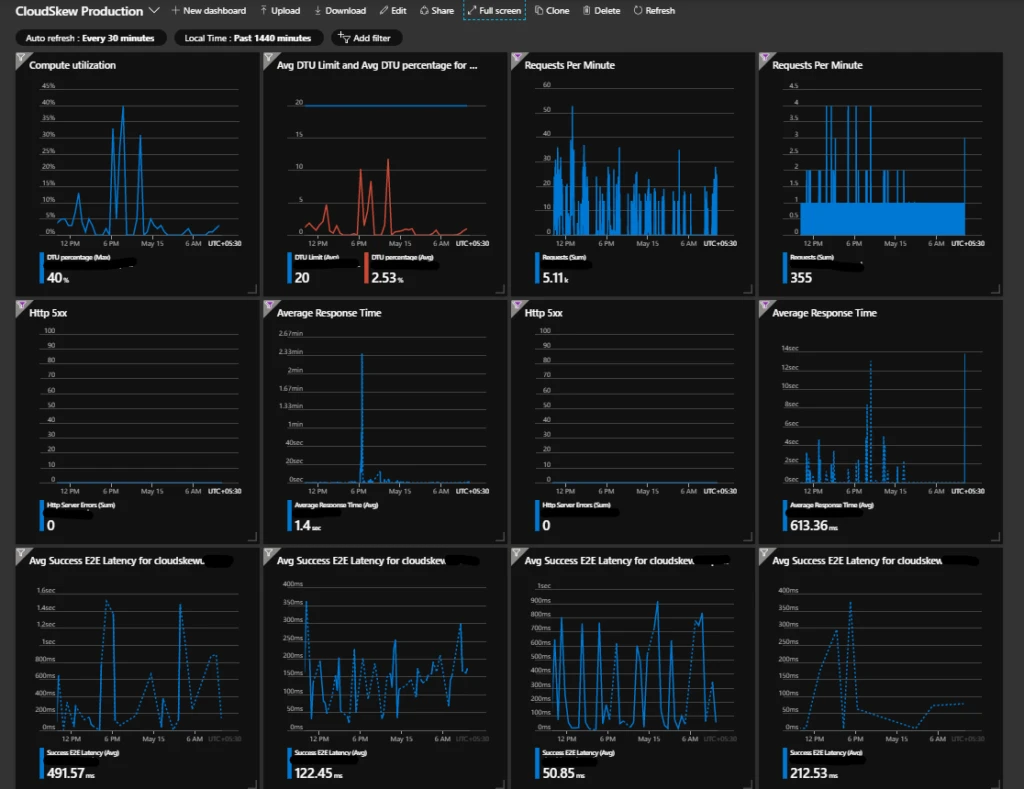

Azure Portal Dashboards are used to visualize metrics from the various Azure resources deployed by CloudSkew.

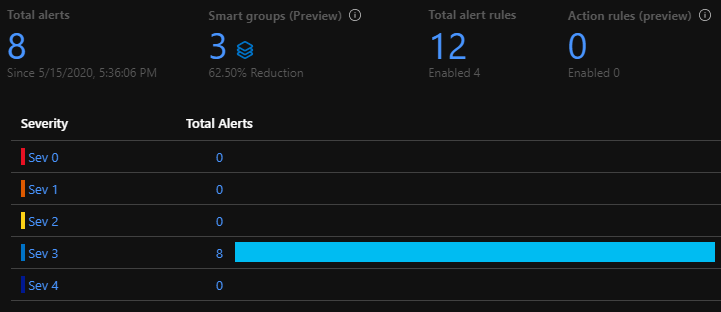

Azure Monitor’s metric-based alerts are being used to get incident notifications over email and Slack. Some examples of conditions that trigger alerts:

Metrics are evaluated and sampled at 15-minute frequency with 1-hour aggregation windows.
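Azure Monitor performs this evaluation itself; the Python sketch below only spells out what a 15-minute frequency with a 1-hour window means: every 15 minutes, the metric is aggregated over the preceding 60 minutes and compared with a threshold, so consecutive windows overlap. The average aggregation and the 80% threshold used in the test are assumptions, since the post’s list of alert conditions is not available here.

```python
from statistics import mean


def evaluate_alert(samples: list[tuple[int, float]], now: int,
                   threshold: float, window: int = 60) -> bool:
    """Return True if the average over the last `window` minutes exceeds `threshold`.

    `samples` are (minute, value) pairs; the caller runs this every 15 minutes.
    """
    recent = [value for minute, value in samples if now - window < minute <= now]
    return bool(recent) and mean(recent) > threshold
```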

Note: Currently, 100% of the incoming metrics are sampled. Over time, as usage grows, we’ll start filtering out outliers at P99.
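The post doesn’t say how the P99 cut-off will be computed; nearest-rank is one common definition, sketched below in Python. The rank is computed with integer arithmetic to avoid floating-point rounding, and values above the cut-off are treated as outliers.

```python
def p99(values: list[float]) -> float:
    """99th percentile by the nearest-rank method."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = -(-99 * len(ordered) // 100)  # ceil(0.99 * n) using integer math
    return ordered[rank - 1]


def drop_outliers(values: list[float]) -> list[float]:
    """Keep only values at or below the P99 cut-off."""
    cutoff = p99(values)
    return [v for v in values if v <= cutoff]
```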

Terraform scripts are used to provision all of the Azure resources and services shown in the architecture diagram (e.g., storage accounts, app services, CDN, DNS zone, container registry, functions, SQL server, service bus). Using Terraform lets us easily achieve parity across the development, test, and production environments. Although these three environments are mostly identical clones of each other, there are minor differences:

Note: The Auth0 tenant has been set up manually since there are no Terraform providers for it. However, it looks like it might be possible to automate the provisioning using Auth0’s Deploy CLI.

Note: CloudSkew’s provisioning scripts are being migrated from Terraform to Pulumi. This article will be updated as soon as the migration is complete.

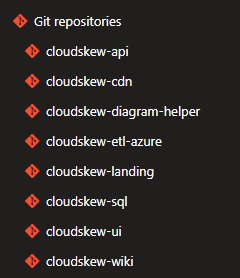

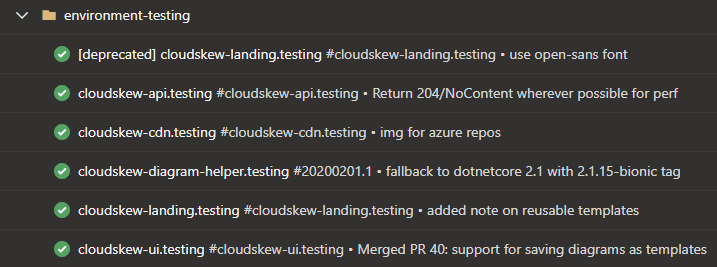

The source code is split across multiple private Azure Repos, following a “one repository per app” rule of thumb. Each app is deployed to the dev, test, and prod environments from the same repo.

Feature development and bug fixes happen in private or feature branches, which are ultimately merged into master branches via pull requests.

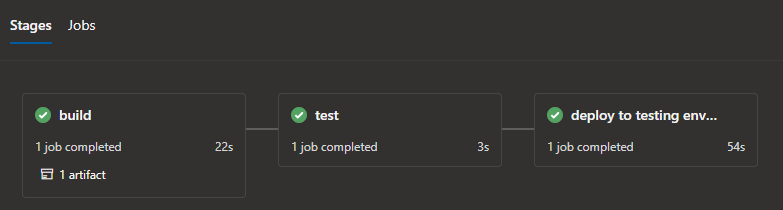

Azure Pipelines is used for continuous integration (CI). Check-ins are built, unit tested, packaged, and deployed to the test environment. CI pipelines are triggered automatically both when a pull request is created and when changes are checked in to master branches.

The pipelines are authored in YAML and executed on Microsoft-hosted Ubuntu agents.

Azure Pipelines’ built-in tasks are heavily leveraged for deploying changes to Azure app services, functions, storage accounts, container registry, etc. Access to Azure resources is authorized via service connections.

The deployment and release process is very simple (blue-green deployments, canary deployments, and feature flags are not being used). Check-ins that pass the CI process become eligible for release to the production environment.

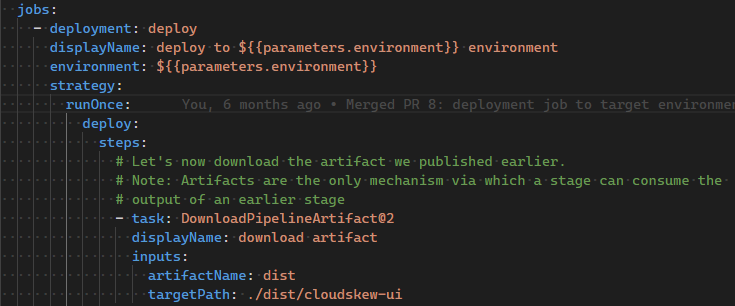

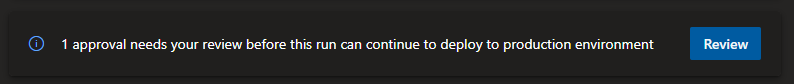

Azure Pipelines deployment jobs are used to target the releases to production environments.

Manual approvals are used to authorize the releases.

As more features are added and usage grows, some architectural enhancements will be evaluated:

Again, any of these enhancements will ultimately be need-driven.

CloudSkew is in very early stages of development and here are the basic guidelines:

Please email us with questions, comments, or suggestions about the project. You can also find a video version of this overview here. Happy diagramming!