ONNX Runtime Web: running your machine learning model in the browser

Written by Emma Ning

A glance at ONNX Runtime (ORT)

ONNX Runtime is a high-performance, cross-platform inference engine for running all kinds of machine learning models. It supports models from all the most popular training frameworks, including TensorFlow, PyTorch, scikit-learn, and more. ONNX Runtime aims to give AI developers an easy-to-use experience for running models on various hardware and software platforms. Beyond accelerating server-side inference, ONNX Runtime has offered ONNX Runtime for Mobile since version 1.5. Now, with the ONNX Runtime 1.8 release, ORT Web is a new offering focused on in-browser inference.

In-browser inference with ORT Web

Running machine-learning-powered web applications in browsers has drawn a lot of attention from the AI community. Making native AI applications portable across multiple platforms is challenging because programming languages and deployment environments vary. Web applications, by contrast, achieve cross-platform portability from a single implementation that runs in the browser. Running machine learning models in the browser can also improve performance by reducing client-server communication, and it simplifies distribution because users don't need to install any additional libraries or drivers.

How does it work?

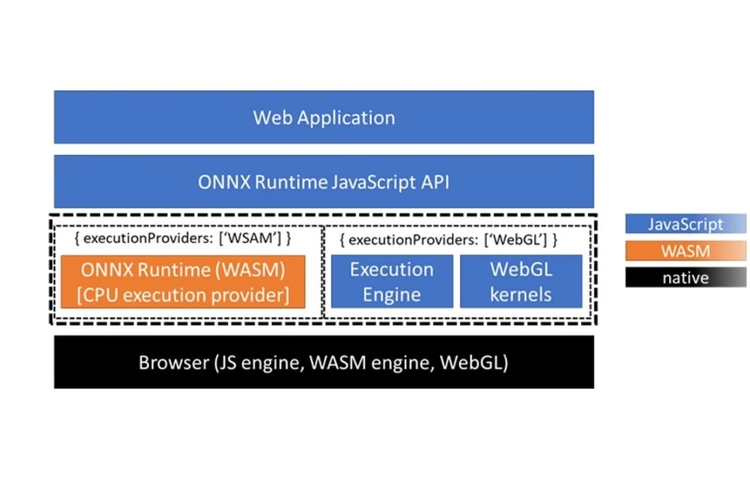

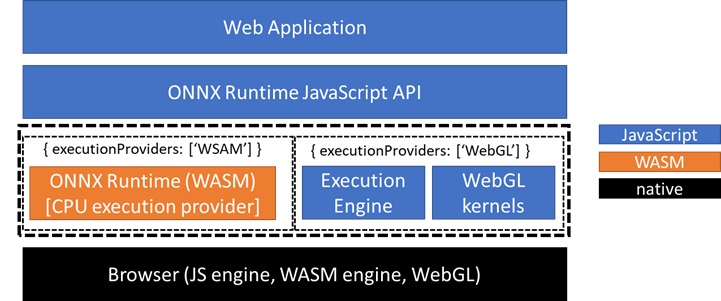

ORT Web accelerates model inference in the browser on both CPUs and GPUs, through its WebAssembly (WASM) and WebGL backends respectively. For CPU inference, ORT Web uses Emscripten to compile the native ONNX Runtime CPU engine into the WASM backend. For GPUs, ORT Web adopts WebGL, a popular standard for accessing GPU capabilities, to achieve high performance.

WebAssembly (WASM) backend for CPU

WebAssembly lets you run code written for native, server-side environments on the client side in the browser. Before WebAssembly, JavaScript was the only language available in the browser. Compared with JavaScript, WebAssembly offers advantages such as faster load times and more efficient execution. Furthermore, WebAssembly supports multi-threading through SharedArrayBuffer and Web Workers, as well as SIMD128 (128-bit Single Instruction, Multiple Data) instructions that accelerate bulk data processing. Together, these make WebAssembly an attractive way to execute a model at near-native speed on the web.

We use Emscripten, an open-source compiler toolchain, to compile the ONNX Runtime C++ code into WebAssembly so that it can be loaded in browsers. This lets us reuse the ONNX Runtime core and the native CPU engine. As a result, the ORT Web WASM backend can run any ONNX model and supports most of the functionality that native ONNX Runtime offers, including full ONNX operator coverage, quantized ONNX models, and the mini runtime. We use WebAssembly's multi-threading and SIMD features to further accelerate model inference. Note that SIMD is a new feature and isn't yet available in every browser that supports WebAssembly. Browser support for new WebAssembly features is listed on the webassembly.org website.

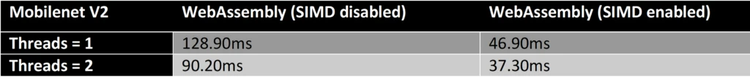

During initialization, ORT Web checks the capabilities of the runtime environment to detect whether multi-threading and SIMD are available. If either is unavailable, ORT Web falls back to a version suited to the environment. Taking MobileNet V2 as an example, enabling SIMD together with two threads speeds up CPU inference by 3.4x compared with plain WebAssembly with neither feature enabled.
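ORT Web performs this capability check internally, so applications don't need to. To illustrate what such a check can look like, here is a minimal sketch that is not ORT Web's own code. It assumes SIMD can be probed by asking the engine to validate a tiny module that uses one SIMD instruction, and that multi-threading is possible when `SharedArrayBuffer` is exposed (which browsers only do on cross-origin-isolated pages). The variant labels returned by `selectVariant` are hypothetical.

```javascript
// Minimal WebAssembly module whose only function uses a SIMD instruction
// (i32.const 0; i8x16.splat). Engines without SIMD support reject it.
const SIMD_PROBE = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // "\0asm", version 1
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7b,       // type section: () -> v128
  0x03, 0x02, 0x01, 0x00,                         // function section: 1 function of type 0
  0x0a, 0x08, 0x01, 0x06, 0x00,                   // code section: 1 body of 6 bytes, 0 locals
  0x41, 0x00, 0xfd, 0x0f, 0x0b,                   // i32.const 0; i8x16.splat; end
]);

function detectWasmCapabilities() {
  // validate() compiles nothing; it only checks that the bytes form a valid module.
  const simd = typeof WebAssembly === 'object' && WebAssembly.validate(SIMD_PROBE);
  // Multi-threaded WebAssembly needs SharedArrayBuffer. Browsers expose it only on
  // cross-origin-isolated pages; outside browsers (e.g. Node.js) the global
  // `crossOriginIsolated` is undefined, so SharedArrayBuffer alone is the signal.
  const threads =
    typeof SharedArrayBuffer !== 'undefined' &&
    (typeof crossOriginIsolated === 'undefined' || crossOriginIsolated === true);
  return { simd, threads };
}

// Map the detected capabilities to one of four build variants (hypothetical labels).
function selectVariant({ simd, threads }) {
  if (simd && threads) return 'simd-threaded';
  if (simd) return 'simd';
  if (threads) return 'threaded';
  return 'baseline';
}

const caps = detectWasmCapabilities();
console.log(caps, selectVariant(caps));
```

Falling back one feature at a time keeps whichever speedup the environment can still provide, instead of dropping straight to the slowest build.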

WebGL backend for GPU

WebGL is a JavaScript API that conforms to the OpenGL ES 2.0 standard. It is supported by all major browsers and on various platforms, including Windows, Linux, macOS, Android, and iOS. Because ORT Web's GPU backend is built on WebGL, it works across this wide range of environments, which lets users port their deep learning models seamlessly across platforms.

In addition to portability, the ORT WebGL backend delivers superior inference performance through the following optimizations: pack mode, data cache, code cache, and node fusion. Pack mode reduces the memory footprint by up to 75 percent while improving parallelism. The data cache avoids creating the same GPU data multiple times: ORT Web reuses GPU data (textures) as much as possible. WebGL uses the OpenGL Shading Language (GLSL) to build the shaders that execute GPU programs, but shaders must be compiled at runtime, which introduces unacceptably high overhead. The code cache addresses this by ensuring that each shader is compiled only once. The WebGL backend already supports quite a few common node fusions, and there are plans to use the graph optimization infrastructure to support a large collection of graph-based optimizations.
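Two of these optimizations are easy to reason about in isolation. The sketch below is illustrative, not ORT Web's implementation. For pack mode, it assumes an unpacked layout that stores one value per RGBA texel and a packed layout that stores a 2x2 block of values per texel (a scheme commonly used by WebGL ML runtimes), which shows where "up to 75 percent" comes from and why odd dimensions save less. For the code cache, it memoizes compiled programs by their GLSL source; the `compile` callback is a hypothetical stand-in for the real WebGL calls.

```javascript
// --- Pack mode: texel counts for a rows x cols tensor ---

// Unpacked: one value per RGBA texel.
function unpackedTexels(rows, cols) {
  return rows * cols;
}

// Packed: one 2x2 block of values per RGBA texel; odd dimensions are padded up.
function packedTexels(rows, cols) {
  return Math.ceil(rows / 2) * Math.ceil(cols / 2);
}

// Fraction of texture memory saved by packing.
function memorySaving(rows, cols) {
  return 1 - packedTexels(rows, cols) / unpackedTexels(rows, cols);
}

console.log(memorySaving(224, 224)); // 0.75: even dimensions hit the maximum saving
console.log(memorySaving(3, 3));     // 1 - 4/9, about 0.556: padding reduces the saving

// --- Code cache: compile each distinct shader source only once ---

class ShaderCache {
  // `compile` is a hypothetical callback wrapping gl.createShader,
  // gl.shaderSource, gl.compileShader and gl.linkProgram.
  constructor(compile) {
    this.compile = compile;
    this.programs = new Map(); // GLSL source text -> compiled program
  }

  get(source) {
    let program = this.programs.get(source);
    if (program === undefined) {
      program = this.compile(source); // expensive step, runs only on a cache miss
      this.programs.set(source, program);
    }
    return program;
  }
}

let compiles = 0;
const cache = new ShaderCache((src) => ({ id: ++compiles, src }));
const reluShader = 'void main() { /* relu */ }';
cache.get(reluShader);
cache.get(reluShader); // cache hit: no second compilation
console.log(compiles); // 1
```

Keying the cache on the full shader source is the simplest correct choice: two operators that generate identical GLSL share one compiled program, while any difference in the generated code yields a separate entry.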

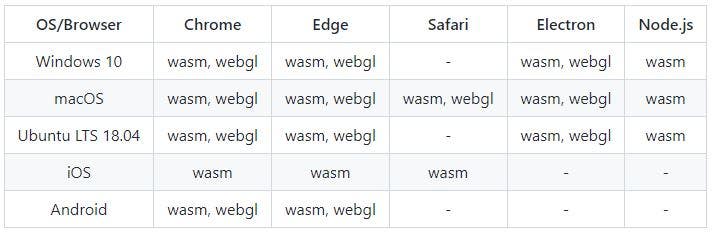

The WASM backend supports all ONNX operators, while the WebGL backend supports a subset of them. A list of the operators supported by each backend is available in the ORT Web documentation. The platforms that each backend supports in ORT Web are shown in the accompanying compatibility table.

Get started

In this section, we’ll show you how you can incorporate ORT Web to build machine-learning-powered web applications.

Get an ONNX model

Thanks to the framework interoperability of ONNX, you can convert a model trained in any framework that supports ONNX into the ONNX format. `torch.onnx.export` is PyTorch's built-in API for exporting models to ONNX, and TensorFlow-ONNX (tf2onnx) is a standalone tool for converting TensorFlow and TensorFlow Lite models to ONNX. The ONNX Model Zoo also offers a variety of pre-trained ONNX models covering common scenarios for a quick start.

Run inference on an ONNX model in the browser

There are two ways to use ORT Web: through a script tag or through a bundler. ORT Web's APIs for scoring a model are similar to those of native ONNX Runtime: first create an ONNX Runtime inference session with the model, then run the session with input data. By providing a consistent development experience, we aim to save developers time and effort when integrating ML into applications and services on different platforms through ONNX Runtime.

The following code snippet shows how to call the ORT Web API to run inference on a model with a chosen backend.

```javascript
const ort = require('onnxruntime-web');

async function main() {
  // create an inference session, using the WebGL backend (default is 'wasm')
  const session = await ort.InferenceSession.create('./model.onnx', { executionProviders: ['webgl'] });

  // … build `feeds`, an object that maps each model input name to an ort.Tensor

  // feed inputs and run
  const results = await session.run(feeds);
}

main();
```

Figure 4: Code snippet of ORT Web APIs.

Some advanced features, such as the maximum number of threads and whether SIMD is enabled, can be configured by setting properties of the `ort.env` object.

```javascript
// set these flags before creating the first InferenceSession

// set the maximum number of threads for the WebAssembly backend; set to 1 to disable multi-threading
ort.env.wasm.numThreads = 1;

// enable or disable SIMD (default is true)
ort.env.wasm.simd = false;
```

Figure 5: Code snippet of properties setting in ORT Web.

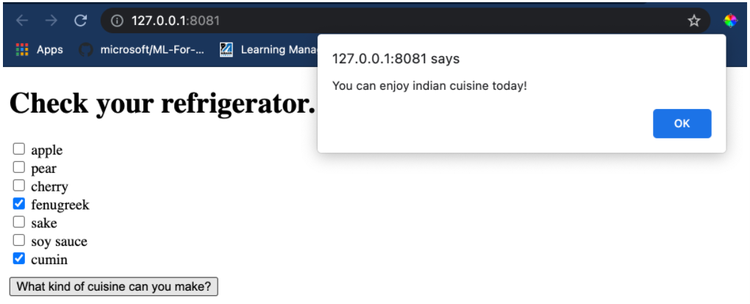

Pre-processing and post-processing must be handled in JavaScript: inputs need to be prepared before they are fed into ORT Web, and outputs need to be interpreted after inference. The ORT Web Demo shows several interesting in-browser vision scenarios powered by image models running on ORT Web; its source code covers both image input processing and inference through ORT Web. Another end-to-end tutorial, created by the Cloud Advocate curriculum team, walks through building a Cuisine Recommender web app with ORT Web. It covers exporting a scikit-learn model to ONNX and running that model with ORT Web through a script tag.
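For an image classifier, this processing typically has two halves. The sketch below assumes a model that takes a float32 input of shape `[1, 3, height, width]` (NCHW) normalized with the ImageNet mean and standard deviation, and that outputs one logit per class; these constants and the function names are assumptions for illustration, so check what your own model expects. Pre-processing turns interleaved RGBA pixels (such as `ImageData.data` from a canvas) into planar channels; post-processing applies softmax and picks the top-k classes.

```javascript
// Assumed normalization constants (ImageNet mean/std); use whatever your model expects.
const MEAN = [0.485, 0.456, 0.406];
const STD = [0.229, 0.224, 0.225];

// Pre-processing: interleaved RGBA bytes -> planar float32 data in NCHW layout.
function rgbaToNchwFloat32(rgba, width, height, mean = MEAN, std = STD) {
  const planeSize = width * height;
  if (rgba.length !== planeSize * 4) {
    throw new Error(`expected ${planeSize * 4} RGBA bytes, got ${rgba.length}`);
  }
  const data = new Float32Array(3 * planeSize);
  for (let i = 0; i < planeSize; i++) {
    for (let c = 0; c < 3; c++) {
      // scale 0..255 to 0..1, then normalize per channel; alpha (offset 3) is dropped
      data[c * planeSize + i] = (rgba[i * 4 + c] / 255 - mean[c]) / std[c];
    }
  }
  return { data, dims: [1, 3, height, width] };
}

// Post-processing: turn raw logits into probabilities.
function softmax(logits) {
  let max = -Infinity;
  for (const v of logits) if (v > max) max = v; // subtract the max for numerical stability
  const exps = Array.from(logits, (v) => Math.exp(v - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Post-processing: the k most likely class indices with their probabilities.
function topK(probs, k) {
  return Array.from(probs, (p, index) => ({ index, p }))
    .sort((a, b) => b.p - a.p)
    .slice(0, k);
}

// A 2x1 image: one pure red pixel, one pure green pixel.
const pixels = new Uint8ClampedArray([255, 0, 0, 255, 0, 255, 0, 255]);
const input = rgbaToNchwFloat32(pixels, 2, 1, [0, 0, 0], [1, 1, 1]);
console.log(input.dims, Array.from(input.data)); // [1, 3, 1, 2] [1, 0, 0, 1, 0, 0]
console.log(topK(softmax([2.0, 0.5, 1.0]), 2).map((r) => r.index)); // [0, 2]
```

With ORT Web, the returned `data` and `dims` could be wrapped as `new ort.Tensor('float32', data, dims)` and placed in `feeds` under the model's input name, and the output tensor's `data` passed to `softmax`.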

Looking forward

We hope this has inspired you to try out ORT Web in your web applications. We would love to hear your suggestions and feedback; you can participate or leave comments in our GitHub repository (ONNX Runtime). We are continuing to improve performance and model coverage and to add new features. On-device training is another interesting possibility we want to explore for ORT Web. Stay tuned for our updates.