
Next week is KubeCon North America 2019, but we wanted to give you an early preview of one of the things we’ll be showing. Over the last few years, we’ve been working on tools for the cloud native ecosystem. From Helm and Brigade to Porter and Rudr, each tool we have built is designed to stand on its own. But our vision has always been that the tools could be combined to create things larger than the sum of their parts. In today’s post, I will show how Porter and Brigade can be combined to make a Kubernetes controller for deploying CRDs.

Brigade is a tool for constructing workflows in Kubernetes using JavaScript. It’s a flexible way to build pipelines, whether they’re for CI/CD or for larger scale data processing.

Porter is a cloud installer that helps you create, install, and manage bundles based on the CNAB specification. Cloud Native Application Bundles (CNAB) is part of our ongoing effort to provide a standard way of packaging and deploying cloud native applications.

A moment of inspiration led us to try out an interesting experiment: Could we use Brigade to build a Kubernetes controller, and then use Porter as a backend to that controller? And in so doing, could we create a CNAB controller without writing massive amounts of Go code? At KubeCon, we’re excited to be showing the outcome of this experiment.

In the end, our effort boiled down to two parts:

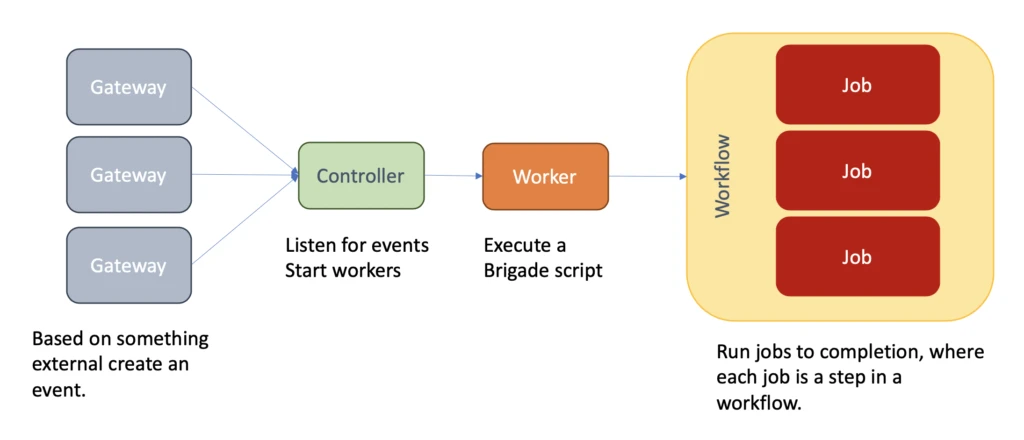

Brigade follows a simple and extensible pattern. It has a central controller that handles executing workflows like this:

The typical Brigade example is its GitHub integration: When a new Pull Request is opened on GitHub, the Brigade GitHub gateway triggers an event. A new worker is created, and the event information is passed to it. The worker then starts up jobs to run unit tests, build binaries, and so on.

We wanted to see if this pattern could be adapted to Kubernetes controllers, which are already centered on the concept of events.

A Kubernetes controller frequently listens for custom resources (as created by Custom Resource Definitions, or CRDs). A custom resource is a special type that is not part of Kubernetes’ core, but is user-defined.

When the Kubernetes API receives a request to work with a custom resource, it sends a notification event:

This kind of event system is very easy to implement in Brigade.

We’ve recently transitioned a lot of our coding efforts from Go to Rust. This project seemed like a great opportunity to showcase how powerful Rust’s Kubernetes libraries are. So with around 100 lines of Rust code, we created a Brigade gateway that can accept a custom resource name and then listen on the Kubernetes event stream for events related to that custom resource. Since it can be used to attach to any Kubernetes resource type, we named it the Brigade Universal Controller for Kubernetes (BUCK). And we built it specifically to be a tool for rapidly building Kubernetes controllers.

When Buck receives an event for its custom resource, it notifies Brigade, which creates a new worker. And that worker is handed the custom resource (in JSON form) as well as the name of the event that triggered it.

Implementing a new controller, then, is as simple as this:

    const { events } = require("brigadier");

    events.on("resource_added", handle);
    events.on("resource_modified", handle);
    events.on("resource_deleted", handle);

    function handle(e, p) {
      let obj = JSON.parse(e.payload); // <-- your Kubernetes object
      console.log(obj);
    }

The above prints the custom resource that it receives for any Buck event.
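A handler like this is easy to try outside a cluster, because an event is just an object with a `type` and a JSON `payload`. The following is a minimal sketch under that assumption; `describeEvent` and the sample event are hypothetical names made up for illustration and are not part of Brigade or Buck.

```javascript
// Hypothetical helper, not part of Brigade or Buck: summarize an event in
// one line, tolerating a payload that is missing or not valid JSON.
function describeEvent(e) {
  let obj;
  try {
    obj = JSON.parse(e.payload);
  } catch (err) {
    return `${e.type}: unparseable payload`;
  }
  if (obj === null || typeof obj !== "object") {
    return `${e.type}: payload is not an object`;
  }
  const kind = obj.kind || "<unknown kind>";
  const name = (obj.metadata && obj.metadata.name) || "<unnamed>";
  return `${e.type}: ${kind}/${name}`;
}

// A made-up event shaped like the ones the handler receives.
const sampleEvent = {
  type: "resource_added",
  payload: JSON.stringify({ kind: "Example", metadata: { name: "demo" } })
};

console.log(describeEvent(sampleEvent));
console.log(describeEvent({ type: "resource_modified", payload: "{not json" }));
```

Inside a real Brigade script, the same function could be called from `handle(e, p)` in place of the bare `console.log(obj)`.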

To round out the work on Buck, we built a Helm chart that can help you define a custom resource and deploy the appropriate Buck gateway all at once.

With Buck complete, the next step was to write a CNAB controller in JavaScript, writing a more robust tool based on the script above.

Once we were receiving events for our custom resource, all we really wanted to do to implement a CNAB controller was pass the event data to Porter. Then Porter could manage the lifecycle of the bundle.

The first step to accomplishing this goal was to define a Porter action as a Kubernetes custom resource. We ended up with a fairly simple YAML file:

    apiVersion: cnab.technosophos.com/v1
    kind: Release
    metadata:
      # This will be used as the name of the install
      name: cowsay
    spec:
      # the bundle to be pulled from an OCI repository
      bundle: technosophos/porter-cowsay:latest
      # the VALUES to be supplied to parameters defined on the bundle
      parameters:
        - name: install_message
          value: Moooo
        - name: uninstall_message
          value: Baaaah
      credentials:
        - name: bogo_token
          value: bogo_value

The example above describes a CNAB installation:

- The installation is named cowsay
- The bundle to install is technosophos/porter-cowsay:latest
- Set the install_message parameter to Moooo
- Set the uninstall_message parameter to Baaaah
- Supply the bogo_token credential with the value bogo_value

If the above YAML is created, the appropriate bundle will be installed. When the above YAML is modified and re-submitted, the CNAB installation is upgraded. Of course, when the above is submitted as a deletion, the CNAB is uninstalled.
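Because the controller acts on whatever resource is submitted, it can be useful to check the shape of a Release before doing anything with it. This is a minimal sketch, not something from the original script: `validateRelease` is a hypothetical helper, and treating `metadata.name` and `spec.bundle` as mandatory is an assumption based on how the example above is laid out.

```javascript
// Hypothetical validator for the Release custom resource shown above.
// Returns a list of human-readable problems; an empty list means "looks fine".
function validateRelease(obj) {
  if (obj === null || typeof obj !== "object") {
    return ["resource is not an object"];
  }
  const errors = [];
  const meta = obj.metadata || {};
  if (typeof meta.name !== "string" || meta.name === "") {
    errors.push("metadata.name is required");
  }
  const spec = obj.spec || {};
  if (typeof spec.bundle !== "string" || spec.bundle === "") {
    errors.push("spec.bundle is required");
  }
  // parameters and credentials are optional lists of { name, value } pairs.
  for (const section of ["parameters", "credentials"]) {
    const list = spec[section] === undefined ? [] : spec[section];
    if (!Array.isArray(list)) {
      errors.push(`spec.${section} must be a list`);
      continue;
    }
    list.forEach((item, i) => {
      if (!item || typeof item.name !== "string" || item.name === "") {
        errors.push(`spec.${section}[${i}].name is required`);
      }
    });
  }
  return errors;
}

// The cowsay example from above, as the JSON object a handler would see.
const cowsayRelease = {
  apiVersion: "cnab.technosophos.com/v1",
  kind: "Release",
  metadata: { name: "cowsay" },
  spec: {
    bundle: "technosophos/porter-cowsay:latest",
    parameters: [
      { name: "install_message", value: "Moooo" },
      { name: "uninstall_message", value: "Baaaah" }
    ],
    credentials: [{ name: "bogo_token", value: "bogo_value" }]
  }
};

console.log(validateRelease(cowsayRelease));
console.log(validateRelease({}));
```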

With that done, it was time to move on to configuring Porter.

Porter is typically executed by users on the command line. But to make it accessible inside of a Brigade job, we needed to pack it inside of a Docker image.

I wanted to make it possible to do more with this gateway in the future, so I made sure to build Porter's mixins as well as add some other useful tools:

    FROM docker:dind

    ENV HELM_VER 2.12.3

    RUN apk add \
        ca-certificates bash curl && \
        curl https://deislabs.blob.core.windows.net/porter/latest/install-linux.sh | bash && \
        mkdir -p /porter

    ENV PATH="$PATH:/root/.porter"

    WORKDIR /porter

There is one very important feature of the Dockerfile above, though: It uses the base image docker:dind. This is the “Docker in Docker” image. It allows us to execute Docker operations from within a running Docker container. Since Porter will be managing the installation of one or more Docker images, we need the Docker in Docker image.

I pushed the image created by that Dockerfile off to Docker Hub, naming it technosophos/porter:latest.

The next step was to write a Brigade script that answered each event by invoking Porter.

brigade.js script

The most exciting part of our project was writing a Brigade script that could take the Porter image and execute it each time it received an event from Kubernetes. The resulting script was only about 50 lines long. Here I will break it down into a few chunks and explain what we did.

First, we started by reading the parameters and credentials from the custom resource:

    const { events, Job } = require("brigadier");

    events.on("resource_added", handle);
    events.on("resource_modified", handle);
    events.on("resource_deleted", handle);
    events.on("resource_error", handle);

    function handle(e, p) {
      console.log(`buck-porter for ${e.type}`);

      let o = JSON.parse(e.payload);
      console.log(o);

      let args = [];
      o.spec.parameters.forEach(pair => {
        args.push(`--param ${pair.name}="${pair.value}"`);
      });

      let creds = [];
      o.spec.credentials.forEach(cred => {
        creds.push({ name: cred.name, source: { value: cred.value } });
      });

      let credentials = JSON.stringify({ credentials: creds });
      console.log(`Credentials: ${credentials}`);

      //...
    }

The payload holds the custom resource that we received from Brigade. We parse that, and then look through the parameters and credentials sections to get our configuration data. As a reminder of what data this script is fetching, take a look at the parameters and credentials sections in the YAML:

    apiVersion: cnab.technosophos.com/v1
    kind: Release
    metadata:
      # This will be used as the name of the install
      name: cowsay
    spec:
      # the bundle to be pulled from an OCI repository
      bundle: technosophos/porter-cowsay:latest
      # the VALUES to be supplied to parameters defined on the bundle
      parameters:
        - name: install_message
          value: Moooo
        - name: uninstall_message
          value: Baaaah
      credentials:
        - name: bogo_token
          value: bogo_value
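One thing to watch in the script above is that each parameter value is wrapped in double quotes and later interpolated into a shell command, so a value containing quotes, `$`, or backticks could be reinterpreted by the shell. The sketch below shows one possible way to harden that step with POSIX-style single quoting. `shellQuote` and `paramArgs` are hypothetical helpers, not part of Brigade or Porter, and the sketch assumes the job's tasks are run by a POSIX shell.

```javascript
// Hypothetical helper: quote a value so a POSIX shell treats it as one
// literal word. Everything goes inside single quotes, and each embedded
// single quote is written as '\'' (close quote, escaped quote, reopen).
function shellQuote(value) {
  return "'" + String(value).replace(/'/g, "'\\''") + "'";
}

// Build the --param flags from spec.parameters, quoting name=value as a unit.
function paramArgs(parameters) {
  return (parameters || []).map(
    pair => `--param ${shellQuote(`${pair.name}=${pair.value}`)}`
  );
}

const exampleParams = [
  { name: "install_message", value: "Moooo" },
  { name: "uninstall_message", value: "it's $HOME" }
];

console.log(paramArgs(exampleParams).join(" "));
```

In the handler, `paramArgs(o.spec.parameters)` could stand in for the `forEach` loop that fills `args`.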

Next, we needed to figure out which event type had just been triggered, and set the CNAB action accordingly. For example, resource_added needed to be translated to the install action:

    const { events, Job } = require("brigadier");

    //...

    function handle(e, p) {
      // ...

      let action = "version";
      switch (e.type) {
        case "resource_added":
          action = "install";
          break;
        case "resource_modified":
          action = "upgrade";
          break;
        case "resource_deleted":
          action = "uninstall";
          break;
        default:
          console.log("no error handler registered");
          return;
      }

      // ...
    }
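The same translation can also be written as a lookup table, which keeps the event-to-action mapping in one place and makes it easy to test in isolation. This is an alternative sketch rather than the code we shipped; `ACTIONS` and `actionFor` are hypothetical names.

```javascript
// Event name -> Porter action, mirroring the switch statement above.
const ACTIONS = Object.freeze({
  resource_added: "install",
  resource_modified: "upgrade",
  resource_deleted: "uninstall"
});

// Returns the action for an event type, or null when there is none
// (for example resource_error, which the switch above also skips).
function actionFor(eventType) {
  // hasOwnProperty avoids matching inherited keys such as "toString".
  return Object.prototype.hasOwnProperty.call(ACTIONS, eventType)
    ? ACTIONS[eventType]
    : null;
}

console.log(actionFor("resource_added"));
console.log(actionFor("resource_error"));
```

A handler would then return early when `actionFor(e.type)` is `null`, just as the `default` branch does.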

At this point, we knew which action to run, and what parameters to send. So in the last step, we just needed to run a Porter job with that information:

    const { events, Job } = require("brigadier");

    // ...

    function handle(e, p) {
      // ...

      let cmd = `porter ${action} ${o.metadata.name} --tag ${o.spec.bundle} --force ${args.join(" ")} -c buck`;

      let porter = new Job("porter-run", "technosophos/porter:latest");
      porter.tasks = [
        "dockerd-entrypoint.sh &",
        "sleep 20",
        "mkdir -p /root/.porter/credentials",
        "echo $CREDENTIALSET > /root/.porter/credentials/buck.yaml",
        `echo ${cmd}`,
        cmd
      ];
      porter.privileged = true;
      porter.timeout = 1800000; // Assume some bundles will take a long time
      porter.cache = {
        enabled: true,
        size: "20Mi",
        path: "/root/.porter/claims"
      };
      porter.env = {
        CREDENTIALSET: credentials
      };

      return porter.run();
    }

Here, we create a new job named porter-run, using the Porter Docker image we created earlier. We give it a list of tasks to run. In that list, we start the Docker-in-Docker daemon in the background, wait 20 seconds for it to come up, write the credentials file, and then run the porter command.
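The fixed `sleep 20` in the task list is a guess at how long the Docker daemon takes to start. One possible refinement is to poll the daemon until it answers. The sketch below only builds the shell task strings; `waitForDockerTasks` is a hypothetical helper, and it assumes `docker info` and `seq` are available in the job image and that a task exiting non-zero stops the job.

```javascript
// Hypothetical helper: shell tasks that wait up to maxSeconds for the
// Docker daemon to answer, instead of sleeping for a fixed time.
function waitForDockerTasks(maxSeconds) {
  return [
    // Try once per second; stop looping as soon as `docker info` succeeds.
    `for i in $(seq 1 ${maxSeconds}); do docker info >/dev/null 2>&1 && break; sleep 1; done`,
    // Final check: exits non-zero if the daemon never became reachable.
    "docker info >/dev/null 2>&1"
  ];
}

// Sketch of how the task list above might be assembled with the helper.
const exampleTasks = [
  "dockerd-entrypoint.sh &",
  ...waitForDockerTasks(30),
  "mkdir -p /root/.porter/credentials"
];

console.log(exampleTasks.join("\n"));
```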

Before we can run the job, we have to set up a few more things:

- The job must run in privileged mode because it needs to execute Docker-in-Docker (which can represent a security risk in a multi-tenant cluster)
- The credentials are passed to the job through the CREDENTIALSET environment variable

With all of that in place, the final step is to execute porter.run().

As Brigade receives new events for our custom resource, it executes this script and then waits for the script to complete. Brigade’s Kashti UI can be used to watch the status of these events in near real time.

With the completed script, we had a functional Kubernetes controller for CNAB written in JavaScript.

There are a couple of things we’d like to do better, and will keep working on:

- Write a status object into the custom resource, as many built-in Kubernetes controllers do.
- … brigade.js. That would be a cool way to make sure our CNAB stays up-to-date.

We set out to build Buck as a means of rapidly building Kubernetes controllers. And we’ve been asked before whether it would be possible to manage CNAB bundles from within a Kubernetes cluster. So this little project was born of our desire to show how flexible tools can be assembled into interesting higher-order applications with only tiny amounts of code.

While our example isn’t a production-ready solution, we are excited that it demonstrates the first steps in that direction. And we hope that this example inspires others to build their own prototype Kubernetes controllers, as well as use CNAB to package their cloud native applications.

To learn more about Brigade, you can head over to the main site or visit one of our talks at KubeCon (Brigade is an official CNCF project). There is an intro session as well as a deep dive. If you would like to use Buck to create your own Kubernetes controller, the GitHub repository is a great place to start.

And to learn more about CNAB, you can start with the specification or by trying the Porter QuickStart.