
This is the second part of a two-part series introducing you to HashiCorp Consul on Azure. In the first part, we looked at the service discovery properties of Consul and deployed a Consul cluster in Azure. In this second part, we will discuss the properties that turn Consul into a full-blown service mesh solution as of version 1.2 and beyond. We will also look at how to make a variety of infrastructure services in Azure, including Kubernetes, aware of Consul and its service mesh.

By definition, a service mesh solution should provide service discovery and runtime configuration management mechanisms for a set of microservices. In Part 1, we saw that Consul has been designed from the beginning to be a service discovery and service configuration management tool. What Consul did not have until version 1.2, however, was the option to transparently (i.e., without application code changes) authorize and secure communication between services. With the introduction of the Connect feature in Consul 1.2, Consul gained the ability to secure services without making any assumptions about the underlying networking infrastructure, which completed the core feature set expected of a modern service mesh solution.

Service mesh solutions have been getting a lot of attention as a necessary component of cloud-native infrastructure. And if you are deploying dozens or hundreds of microservices that need to discover and communicate with each other in a secure way, then service mesh is the right tool for that job. Many Azure customers, however, are simply moving monolithic applications into the cloud or developing applications that don’t have the type of east-west (a convention used to refer to service-to-service communication) traffic patterns that service mesh was designed to help with. For the use cases where you have very few microservices present, you don’t have to activate Connect properties of Consul; you can continue using Consul as a pure service discovery and configuration engine.

On the other hand, if you are continuously adding new services to your application and have run into the realities of distributed computing at scale, with its non-zero latencies, Consul service mesh will help your services communicate over unreliable, insecure networks. Let’s take a look at the architecture that enables Consul to accomplish that and go over the configuration changes needed to enable Connect in the Consul cluster that we deployed in Part 1 of this series.

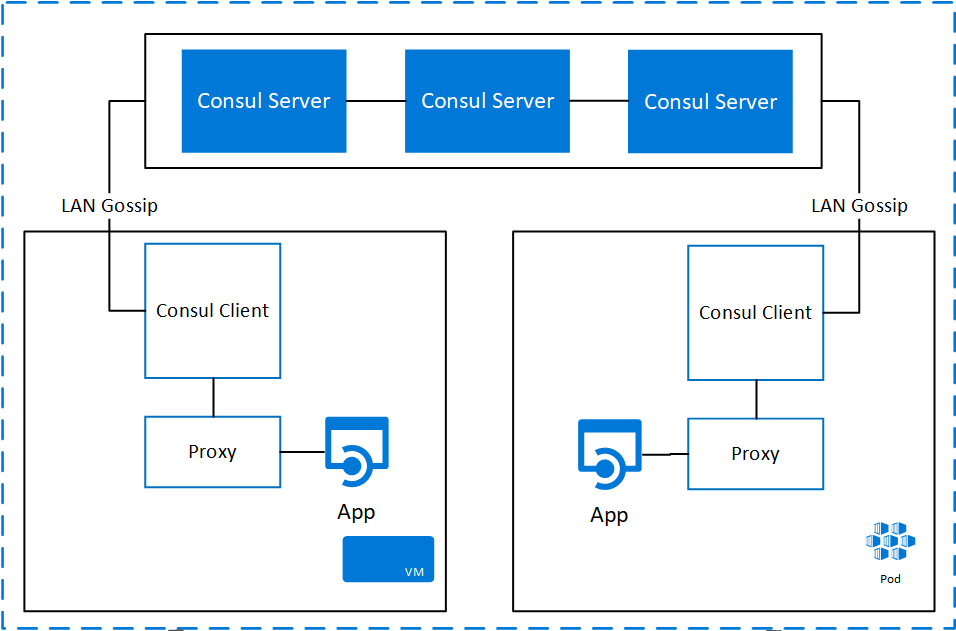

Consul Connect uses proxy sidecars to enable secure inbound and outbound communication without modifying services’ code. In Part 1, you saw a basic Consul architecture diagram (cropped and reproduced here). Note the proxy objects next to the applications running both on top of Virtual Machines and in an Azure Kubernetes Service (AKS) cluster. Those proxies verify and authorize TLS connections, then forward the traffic over a standard TCP connection to the service itself.

The application code for running services never becomes aware of the existence of Connect. Consul Connect can be enabled when the configuration of the Consul agent running that service includes a special “connect” property. Within that property, the service can define upstream dependent services that it needs to communicate with over Connect, and all requests to those services will be authorized and go over secure TLS channels.
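As a rough illustration of the “connect” property described above, the sketch below writes a service definition for a hypothetical `web` service that reaches a hypothetical `db` service through a sidecar proxy. The service names, ports, and file name are assumptions made for the example. The layout of the `connect` block has also changed across Consul releases, and this sketch uses the `sidecar_service` form rather than the original 1.2 layout, so check the documentation for the version you run.

```shell
# Hypothetical service definition: "web" listens on 8080 and declares "db"
# as an upstream. The sidecar proxy exposes "db" on localhost:9191, so the
# application keeps using a plain local TCP connection.
cat > web.json <<'EOF'
{
  "service": {
    "name": "web",
    "port": 8080,
    "connect": {
      "sidecar_service": {
        "proxy": {
          "upstreams": [
            {
              "destination_name": "db",
              "local_bind_port": 9191
            }
          ]
        }
      }
    }
  }
}
EOF

# With a Consul agent running locally, the definition could then be
# registered and a built-in sidecar proxy started (not executed here):
#   consul services register web.json
#   consul connect proxy -sidecar-for web
```

In this arrangement the `web` application would connect to `localhost:9191` as if the database were local, and the proxy would carry the traffic to `db` over mutual TLS.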

For high performance services, Consul provides the option of native application integration to reduce latency by avoiding the overhead of a proxy. This approach, however, requires application code changes.

Now that you have seen how Consul Connect enables secure service communication channels over existing infrastructure, let’s explore the scenarios for deploying Consul agents into various infrastructure environments in Azure.

In Azure, there are two distinct infrastructure scenarios for deploying and configuring Consul agents. Let’s go over each one of them in detail.

In the first scenario, Consul agents are being deployed on top of Virtual Machines or Virtual Machine Scale Sets in Azure. One approach for automating and easing configuration of Consul agents in Azure is illustrated below:

When configuration changes need to be made to the service, the workflow restarts with DevOps engineers editing config files in GitHub and triggering the build and deploy steps.

In the second scenario, Consul agents are deployed on top of Azure Kubernetes Service (AKS), a managed Kubernetes offering from Azure. In this case, DevOps engineers would use the official Consul Helm chart to deploy Consul servers and agents (or just agents, if we wanted to join the AKS cluster to the Consul cluster we have already created):

When configuration changes need to be made to the service, the workflow restarts with DevOps engineers editing the Helm chart values in GitHub and triggering a rolling update in AKS.
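The Helm-based workflow above might look roughly like the following sketch. It writes a small values file for the official `hashicorp/consul` chart and shows, as comments, the Helm commands that would apply it. The release name, datacenter name, and replica count are illustrative assumptions, and the available values differ between chart versions, so treat this as a starting point rather than a reference configuration.

```shell
# Hypothetical values file for the official Consul Helm chart.
# Names and sizes are placeholders chosen for this example.
cat > consul-values.yaml <<'EOF'
global:
  name: consul
  datacenter: dc1
server:
  replicas: 3
connectInject:
  # Enables automatic injection of Connect sidecar proxies into pods.
  enabled: true
EOF

# Against an AKS cluster selected in the current kubectl context, the chart
# could then be installed or updated (not executed here):
#   helm repo add hashicorp https://helm.releases.hashicorp.com
#   helm upgrade --install consul hashicorp/consul --values consul-values.yaml
```

Re-running `helm upgrade` after editing the values file is what would drive the rolling update described above.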

Now that you have both the Consul cluster and a set of services running and configured for Consul Connect, you can define security rules by specifying which services are allowed to talk to each other. Service identity is provided by TLS certificates that are generated and managed by Consul’s built-in certificate authority (CA) or by an external CA. This identity-based approach to service authorization allows you to segment the network without relying on any networking middleware. You accomplish this segmentation with intentions, which can be defined using the CLI, API, or UI. For example, the following intention denies communication from db to web, so connections from db to web will be rejected.

```shell
$ consul intention create -deny db web
Created: db => web (deny)
```
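Since intentions can also be defined through the HTTP API, the same deny rule could be expressed as a request payload along the lines of the sketch below. The field names follow the intentions API as introduced alongside Connect, and the agent address is the default local one. Both are assumptions about your environment, and later Consul releases offer other ways to manage intentions, so verify the endpoint against the API documentation for your version.

```shell
# Payload equivalent to `consul intention create -deny db web`.
# The file name is a placeholder chosen for this example.
cat > intention.json <<'EOF'
{
  "SourceName": "db",
  "DestinationName": "web",
  "SourceType": "consul",
  "Action": "deny"
}
EOF

# With a Consul agent listening on the default address, the payload could be
# submitted and the result checked from the CLI (not executed here):
#   curl --request POST --data @intention.json http://127.0.0.1:8500/v1/connect/intentions
#   consul intention check db web
```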

In Part 2 of this article series on Consul, you learned about the service mesh properties of Consul, their underlying architecture, and several ways to deploy and configure Consul agents on Azure. Combined with its service discovery and configuration properties, all of which are easy to set up, use, and scale, Consul provides a powerful cloud-native service mesh solution.

Questions or feedback? Let us know in the comments.